Scalability Issues in Large-Scale Machine Learning Projects

Introduction

Large-scale machine learning projects are increasingly common across industries as organizations use data to gain insights and make informed decisions. As these projects grow in size and complexity, however, scalability issues often arise and make it harder to train and run machine learning algorithms successfully.

In this blog post, we will explore some of the key scalability issues that can arise in large-scale machine learning projects. We will discuss the impact of these issues on project performance, the potential causes behind them, and strategies to address and mitigate these challenges.

1. Data Volume

One of the primary challenges in large-scale machine learning projects is dealing with massive amounts of data. As the volume of data increases, it becomes more difficult to process and analyze efficiently. This can lead to longer training times and increased computational requirements.

1.1 Data Preprocessing

Data preprocessing plays a crucial role in addressing scalability issues related to data volume. Techniques such as data sampling, dimensionality reduction, and feature selection can shrink the dataset while preserving most of the information that matters for the task. Additionally, distributed computing frameworks like Apache Hadoop and Apache Spark can be used to process data in parallel, improving scalability.
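As one illustration of data sampling, the sketch below uses reservoir sampling, which draws a fixed-size uniform sample from a stream without loading the whole dataset into memory. The function name `reservoir_sample` and its parameters are hypothetical and chosen for this example; this is one possible sampling approach, not a prescribed one.

```python
import random
from typing import Iterable, List, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int, seed: int = 0) -> List[T]:
    """Return up to k items chosen uniformly at random from a stream.

    Only k items are held in memory at any time, so the stream can be
    far larger than available RAM.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    reservoir: List[T] = []
    for i, item in enumerate(stream):
        if i < k:
            # Fill the reservoir with the first k items.
            reservoir.append(item)
        else:
            # Keep the new item with probability k / (i + 1).
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                reservoir[j] = item
    return reservoir
```

Calling `reservoir_sample(range(1_000_000), 5)` returns five values from the range while iterating over it only once. If the stream holds fewer than `k` items, all of them are returned.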

2. Model Complexity

Large-scale machine learning projects often involve complex models that require significant computational resources. As the complexity of the model increases, scalability issues arise due to the increased time and resources required for training and inference.
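To make the link between model size and resource requirements concrete, here is a rough back-of-the-envelope sketch. It assumes a plain fully connected network with a bias per output unit; the helper names `mlp_param_count` and `weight_memory_bytes` are hypothetical. It counts only the memory for the weights themselves. Training typically needs considerably more, since gradients, optimizer state, and activations also occupy memory.

```python
from typing import Sequence

# Bytes used to store one parameter in a few common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def mlp_param_count(layer_sizes: Sequence[int]) -> int:
    """Count weights and biases of a fully connected network.

    layer_sizes lists the width of each layer, input first,
    e.g. [784, 512, 10].
    """
    return sum(
        n_in * n_out + n_out  # weight matrix plus one bias per output unit
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


def weight_memory_bytes(layer_sizes: Sequence[int], dtype: str = "float32") -> int:
    """Memory needed to hold the parameters alone, in bytes."""
    return mlp_param_count(layer_sizes) * BYTES_PER_PARAM[dtype]
```

For layer sizes `[784, 512, 10]` this gives 407,050 parameters, or about 1.6 MB of weights in `float32`. Because the weight count grows with the product of adjacent layer widths, doubling every layer width roughly quadruples it.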

2.1 Model Optimization

To address scalability issues related to model complexity, optimization techniques can be employed. These include using more efficient algorithms, reducing the number of parameters, and adopting distributed training frameworks. Additionally, techniques like model compression and quantization can reduce the memory footprint of the model, improving scalability.
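The idea behind quantization can be sketched in a few lines. The example below assumes a simple symmetric 8-bit scheme with a single scale for the whole weight list; production toolchains use more elaborate variants, and the function names here are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to integers in [-127, 127] plus one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Choose the scale so the largest magnitude maps to 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]
```

Each weight then takes one byte instead of four (for `float32`), at the cost of a rounding error of at most half the scale per weight. Whether that error is acceptable depends on the model and has to be checked empirically.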

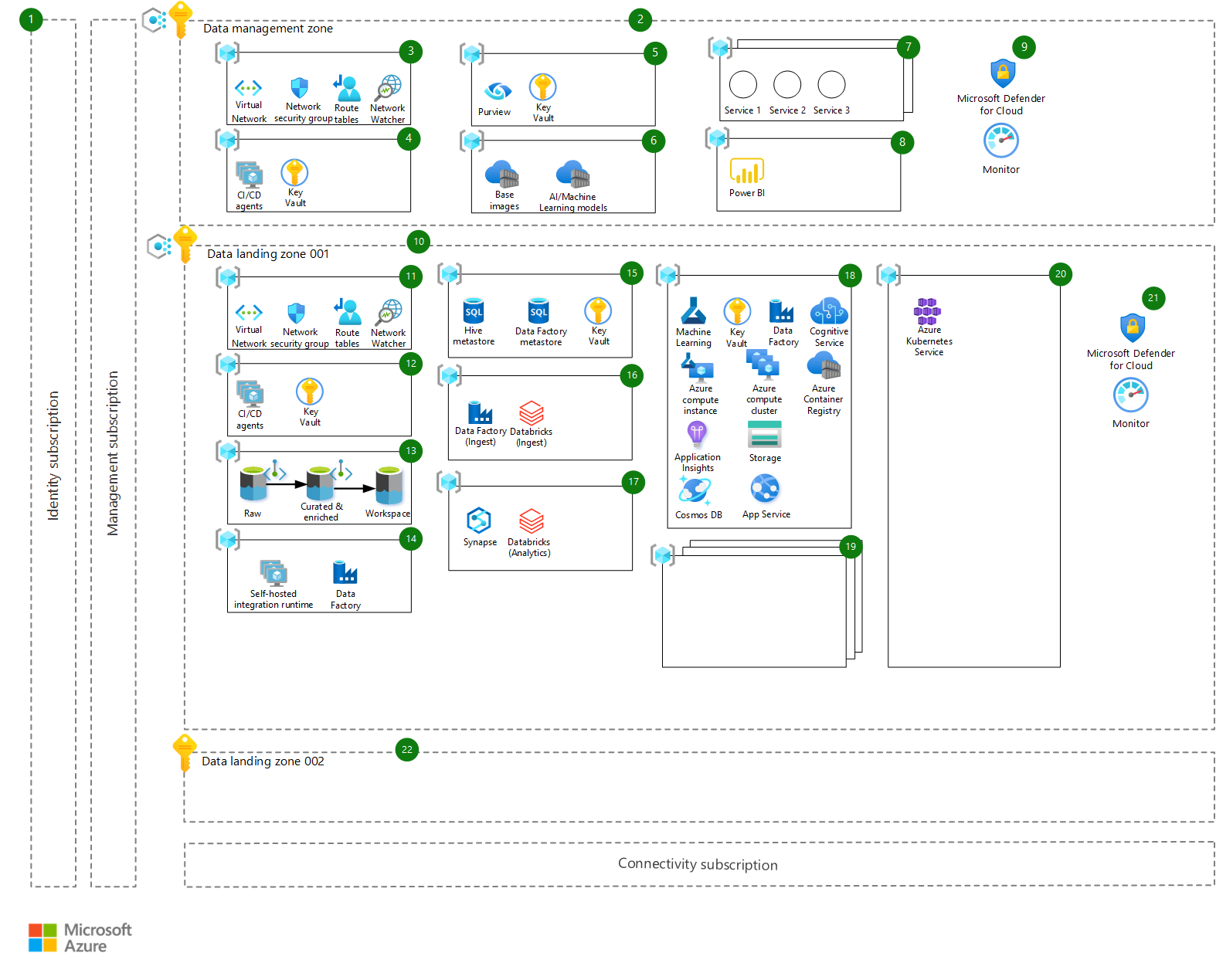

3. Distributed Computing

Large-scale machine learning projects can benefit from distributed computing frameworks that allow for parallel processing and distributed storage. These frameworks enable the efficient utilization of resources and help overcome scalability challenges.

3.1 Parallel Processing

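Parallel processing techniques, such as data parallelism and model parallelism, can be employed to distribute the workload across multiple machines or processors. In data parallelism, each worker holds a full copy of the model and processes a different slice of the data; in model parallelism, the model itself is split across workers. Both approaches can shorten training and inference times, improving scalability.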
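A minimal sketch of data parallelism follows, assuming a one-parameter linear model `y = w * x` trained with squared error on a non-empty dataset. Threads stand in for separate machines here, so the example shows the structure of the technique (shard the data, compute per-shard gradients, combine them) rather than a real speedup. All names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)


def shard_gradient(w: float, shard: Sequence[Sample]) -> Tuple[float, int]:
    """Sum of d/dw (w*x - y)^2 over one shard, plus the shard size."""
    grad_sum = sum(2.0 * (w * x - y) * x for x, y in shard)
    return grad_sum, len(shard)


def data_parallel_step(
    w: float, data: List[Sample], num_workers: int, lr: float
) -> float:
    """One gradient-descent step with the batch split across workers."""
    # Each worker gets every num_workers-th sample.
    shards = [data[i::num_workers] for i in range(num_workers)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        results = list(pool.map(partial(shard_gradient, w), shards))
    # Combine per-shard sums into the mean gradient over the full batch.
    total_grad = sum(g for g, _ in results)
    total_n = sum(n for _, n in results)
    return w - lr * total_grad / total_n
```

Because the per-shard gradient sums are added before dividing by the total sample count, the update matches a single-worker step on the full batch (up to floating-point rounding). In a real cluster, the combining step is where communication overhead appears.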

3.2 Distributed Storage

Distributed storage systems, like the Hadoop Distributed File System (HDFS), spread data across multiple machines so that large datasets can be stored and read in parallel.

Summary

Scalability issues in large-scale machine learning projects can hinder the efficiency and effectiveness of data analysis and model training processes. These issues can manifest in various ways, such as increased computational requirements, longer processing times, and decreased model accuracy.

One common scalability issue is the inability to handle large volumes of data. As datasets grow in size, traditional machine learning algorithms may struggle to process and analyze the data within reasonable timeframes. This can lead to delays in model training and hinder real-time decision-making.

Another challenge is the scalability of algorithms themselves. Some machine learning algorithms are not designed to handle large-scale datasets efficiently. As a result, the computational resources required to train and deploy these algorithms can become prohibitively expensive, limiting their practicality in large-scale projects.

Furthermore, the scalability of infrastructure and resources is crucial in large-scale machine learning projects. Inadequate hardware, network limitations, and insufficient storage capacity can all contribute to scalability issues. It is essential to ensure that the infrastructure can handle the computational demands and storage requirements of the project.

To address scalability issues, various strategies can be employed. These include distributed computing frameworks, such as Apache Spark, which enable parallel processing and, combined with distributed storage, can handle large-scale datasets effectively. Additionally, feature engineering techniques, data sampling, and model compression can help reduce computational requirements and improve scalability.

In conclusion, scalability issues are a common challenge in large-scale machine learning projects. Understanding the causes and implementing appropriate strategies can help overcome these challenges and ensure the successful implementation and execution of machine learning algorithms in large-scale environments.

- Q: What are scalability issues in large-scale machine learning projects?

- A: Scalability issues in large-scale machine learning projects refer to challenges that arise when trying to process and analyze massive amounts of data efficiently and effectively.

- Q: What are the common causes of scalability issues in large-scale machine learning projects?

- A: Common causes of scalability issues include inadequate computational resources, inefficient algorithms, data storage and retrieval bottlenecks, and limitations in distributed computing frameworks.

- Q: How can inadequate computational resources impact scalability in machine learning projects?

- A: Inadequate computational resources can lead to slow processing times, inability to handle large datasets, and increased training and inference times, hindering the scalability of machine learning projects.

- Q: What role do inefficient algorithms play in scalability issues?

- A: Inefficient algorithms can significantly impact scalability by requiring excessive computational power and memory, making it difficult to process large-scale datasets within reasonable timeframes.

- Q: How do data storage and retrieval bottlenecks affect scalability?

- A: Data storage and retrieval bottlenecks can limit the speed at which data can be accessed, impacting the overall performance and scalability of machine learning projects that rely on quick data processing.

- Q: What limitations in distributed computing frameworks can cause scalability issues?

- A: Distributed computing frameworks may have limitations in terms of data partitioning, communication overhead, and load balancing, which can hinder the scalability of machine learning projects that rely on distributed processing.

- Q: How can scalability issues be addressed in large-scale machine learning projects?

- A: Scalability issues can be addressed by optimizing algorithms, utilizing distributed computing frameworks, employing parallel processing techniques, and leveraging cloud computing resources to handle large datasets and increase computational power.

Hello, I’m Brayden Denman, a passionate and experienced Mobile App Developer specializing in Cloud Computing, Software Development, Mobile App Integration, and AI & Machine Learning. With a strong background in these fields, I strive to create innovative and user-friendly solutions that meet the ever-evolving needs of businesses and individuals.
